Nothing is changing the world as significantly or as quickly as artificial intelligence. AI predicts the weather, generates content that was once limited to human beings, and can conduct research and analyze and interpret data. However, the evolution of AI has generated as much concern as it has excitement.

One of the major concerns is that AI generates content from information it gathers on the internet without properly citing its sources. The legality of that practice is still up in the air, as multiple lawsuits play out in court, including copyright infringement suits that The New York Times and numerous other publications have filed against AI companies. Even pop culture icon Taylor Swift recently weighed in on the AI debate after she was targeted by a fake AI-generated photo posted on social media. The fake photo of Swift in an Uncle Sam outfit, which was shared by millions across multiple social media platforms, claimed that she was endorsing former President Donald Trump. According to Time magazine, Swift swiftly discredited the fake image and the false claim, and she publicly endorsed Vice President Kamala Harris immediately following the second presidential debate on Sept. 10.

Despite concerns about legality and misinformation, as AI's abilities continue to expand, human beings' capabilities will also expand because of the efficiency and productivity AI can offer. AI can therefore be a useful tool, but whether that tool becomes an economic powerhouse or an economic burden depends on how it is viewed. For example, over the next ten years, advancements in generative AI could grow global gross domestic product, or GDP, by seven percent, which would equate to around seven trillion dollars, according to Goldman Sachs. However, generative AI's ability to create content, a function once limited to human beings, could place an estimated 300 million jobs in jeopardy, Goldman Sachs reported.

A less-talked-about concern is AI's impact on the environment, which has economic effects as well. Like cryptocurrency mining, AI puts a massive strain on the power grid, so much so that the United States has slowed plans to close coal-fired plants so the grid can keep up with the energy AI consumes. Taken together, these economic and environmental effects are both promising and dangerous, which is essentially AI in a nutshell. In no setting have AI's contradictions been more intensely debated than in higher education.

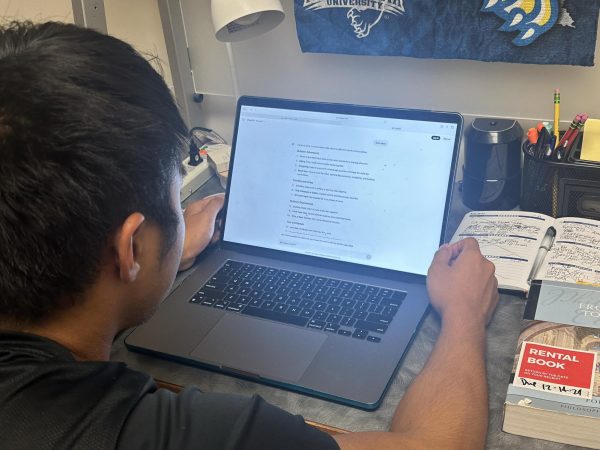

AI's role in higher education and its effect on the job market have been feverishly debated among professors and administrators at colleges across the world. Supporters may argue that AI is useful in higher education for brainstorming research, creating lesson plans, helping students organize their thoughts, and administering interactive instructional learning modules. Opponents may point to how easily students can use AI to plagiarize, potential inaccuracies in the content it generates, and its negative impact on the development of students' critical thinking skills.

Mass Communications and Design adjunct professor Jimmy May said AI has no place in his photojournalism classroom. "You're being true to life. As a photographer, your opinion will show in what you're doing regardless [because] you're a human being, but you are trying to be as fair and accurate as you can in everything you do image wise, so when you have AI interpreting [an image] into whatever it believes, [that] is not a good thing," he explained.

May, a state and national award-winning photojournalist who has taught photography at Misericordia for 18 years and is also chief photographer at the Press Enterprise in Bloomsburg, doesn't use AI outside the classroom, either. "I do a wedding or two a year, and when I talk to clients about that I always tell them, 'You are not going to get a picture from me that is going to have the two of you in a field with a purple sky and orange lightning bolts because it's not what happened. If that's what happens the day you get married, then we have bigger problems than being out in a field taking pictures,'" he said.

Although plenty of professors feel the same way May does about AI in their classrooms, others are finding ways to incorporate it into their lessons. Dr. Patrick Danner, Director of the University Writing Program and Assistant Professor of English, recently authored a policy encouraging professors to use ChatGPT and other AI programs in their classrooms. Danner believes there are multiple reasons professors should incorporate AI into their lesson plans: "One, it shows students ethical and efficient uses [of AI]. Two, I think it will dispel some of the anxiety around AI and what it can do and what students are using it for. And three, I think it's preparing students for the future in a useful way. When [students] graduate from here [they] are not going to enter a world without ChatGPT, so we may as well take it upon ourselves to show [students] how to most effectively use it."

Danner's policy has been adopted by the University Writing Program, but he believes it should be applied class by class, with each professor deciding whether to use AI. "When I'm teaching a professional editing class, we are outlawing ChatGPT because one of the things it does well is it can look at a piece of writing, and I would estimate with 95 to 99 percent accuracy, tell you if those commas are in the right place. In that class, one of the goals is that students should be able to do that on their own," he explained.

Like Dr. Danner, Dr. Steven Tedford, Professor of Mathematics and Chair of the Department of Mathematical Sciences, believes colleges will have to start incorporating AI training into their curricula in some way. "I think [colleges] don't have a choice. The colleges that are flexible and include [AI training], especially for heavy-duty career-oriented majors, are going to do better than the ones that don't," he stated.

Even though Dr. Tedford agrees that colleges will have to teach AI, he also believes it needs to be introduced in primary and secondary education: "[AI training] is going to have to go younger than college. It's going to have to be taught, especially the ethical aspects of it, from elementary school and up in order for it to become something less scary," he said.

Tedford also believes some of the anxiety surrounding AI stems from how pop culture, particularly film, portrays it. "It definitely doesn't help all of the science fiction with robots taking over the world," he explained.

No matter how people view the AI revolution, the bottom line is that it isn’t going anywhere. According to a 2023 business research report conducted by IBM, “AI won’t replace people—but people who use AI will replace people who don’t.”